DLHUB is a powerful, intuitive platform that allows engineers, educators, and system integrators to design, train, and deploy deep learning models — without writing a single line of code.

DLHUB simplifies every step of the deep learning workflow. Whether you're building an image classifier, optimizing predictive models, or experimenting with neural network architectures, DLHUB lets you focus on outcomes — not algorithms.

No-Code, Engineer-Friendly Workflow

Build, train, and evaluate DL models using real-world data without coding or AI expertise, cutting down reliance on external AI consultants or complex toolchains.

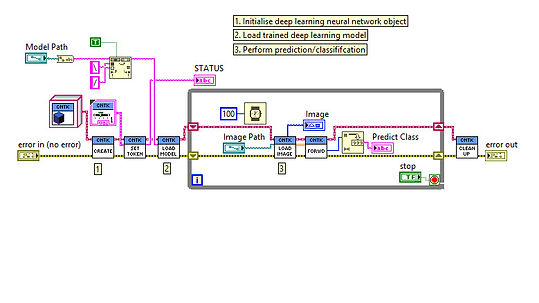

LabVIEW-Ready Deep Learning

Models can be exported and integrated directly into LabVIEW systems, making it easy to embed DL capabilities into your test, measurement, or automation setups.

Secure, On-Premise Training

All training runs locally, ensuring full control over your data and model IP — ideal for organizations with strict privacy, security, or compliance requirements.

How It Works

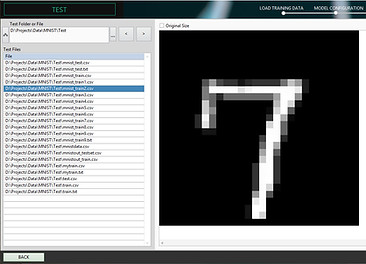

DLHUB supports different types of datasets, including text files, image files, and image folders. When a dataset is loaded into DLHUB, the data normalization procedure is automatically applied.
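For readers curious about what normalization does to the data, here is a minimal Python sketch. This page does not state which normalization DLHUB applies, so the sketch assumes min-max scaling of 8-bit pixel intensities into the range 0 to 1; the function name `min_max_normalize` and the sample values are illustrative only.

```python
def min_max_normalize(values, lo=0.0, hi=255.0):
    """Scale raw values from the range [lo, hi] into [0.0, 1.0].

    Assumption: min-max scaling. DLHUB's actual procedure may differ.
    """
    span = hi - lo
    if span <= 0:
        raise ValueError("hi must be greater than lo")
    return [(v - lo) / span for v in values]


# Example 8-bit grayscale intensities (made-up sample data).
pixels = [0, 64, 128, 255]
normalized = min_max_normalize(pixels)
print(normalized)
```

Keeping inputs in a small, consistent range generally makes training more stable, which is why this step is commonly applied before any model sees the data.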

Key Features

No coding

DLHUB is designed for engineers without deep programming experience, letting them build a sound deep learning model in a few clicks. Its graphical user interface (GUI) makes it easy to select the appropriate components, configure the model, and train it.

✅ A library of pre-trained models that can be used as a starting point for transfer learning. This can save engineers a lot of time and effort in the model development process.

✅ A drag-and-drop interface that makes it easy to select and configure the different components of a deep learning model.

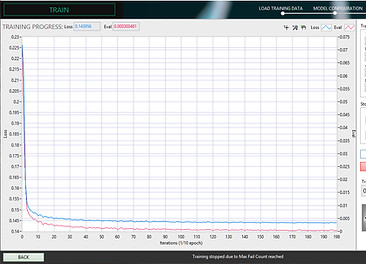

✅ A visual training dashboard that allows engineers to monitor the training process and to make adjustments to the model as needed. This can help to ensure that the model is trained properly.

✅ Automatic detection and use of an NVIDIA GPU for training. This can significantly speed up training, especially for large models.

Multiple deep learning modules

DLHUB supports different types of deep learning modules that help users to create and customize their AI model, including:

✅ Conv2D

✅ Pool2D

✅ Dense

✅ Flatten

✅ Drop

✅ ResNet

✅ Activation

✅ Transfer learning

✅ Recurrent nodes
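To illustrate how some of these modules fit together, the Python sketch below traces the tensor shape through a small Conv2D, Pool2D, Flatten, Dense stack. It is not DLHUB code: the helper names, the 28x28 input, and the layer settings are assumptions chosen for the example, and it uses the standard output-size formulas for unpadded convolution and pooling.

```python
def conv2d_out(size, kernel, stride=1, padding=0):
    """Output length along one axis for a 2D convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def pool2d_out(size, window, stride=None):
    """Output length along one axis for 2D pooling (stride defaults to window)."""
    stride = stride or window
    return (size - window) // stride + 1


# Hypothetical network: 28x28 grayscale input, 8 filters of size 3x3,
# 2x2 pooling, then a Dense layer with 10 outputs.
side = 28
side = conv2d_out(side, kernel=3)      # after Conv2D
conv_side = side
side = pool2d_out(side, window=2)      # after Pool2D
pool_side = side
filters = 8
flat_size = pool_side * pool_side * filters   # after Flatten
dense_params = flat_size * 10 + 10            # Dense weights plus biases

print(conv_side, pool_side, flat_size, dense_params)
```

Working through the shapes this way shows why Flatten sits between the convolutional part and the Dense part: Dense layers expect a one-dimensional input whose length fixes the number of weights.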

Training parameters

DLHUB supports the training of deep learning models with advanced algorithms, including:

✅ AdaDelta: an extension of AdaGrad that adapts learning rates using a decaying window of past gradients, so the effective learning rate does not shrink toward zero over time.

✅ AdaGrad: an adaptive learning rate algorithm that scales each parameter's step by its accumulated squared gradients, so rarely updated parameters take larger steps.

✅ FSAdaGrad: a variant of AdaGrad that is designed to be more efficient.

✅ Adam: a popular learning rate algorithm that combines the advantages of AdaGrad and RMSProp.

✅ RMSProp: an adaptive learning rate algorithm that divides each step by a moving average of recent gradient magnitudes, giving more weight to recent gradients.

✅ MomentumSGD: a stochastic gradient descent algorithm that uses momentum to help the model converge faster.

✅ SGD: a simple stochastic gradient descent algorithm with a single fixed learning rate.
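The differences between these algorithms are easier to see in code. The Python sketch below applies textbook update rules for five of them to a toy one-dimensional problem, minimizing f(x) = x² from a starting point of 5. These are standard formulations, not DLHUB's internal implementation, and the hyperparameter values are assumptions picked for the demonstration.

```python
import math


def grad(x):
    """Gradient of the toy objective f(x) = x**2."""
    return 2.0 * x


def sgd(x, lr=0.1, steps=100):
    for _ in range(steps):
        x -= lr * grad(x)
    return x


def momentum_sgd(x, lr=0.1, beta=0.9, steps=100):
    velocity = 0.0
    for _ in range(steps):
        velocity = beta * velocity + grad(x)   # accumulate past gradients
        x -= lr * velocity
    return x


def adagrad(x, lr=1.0, eps=1e-8, steps=100):
    accum = 0.0
    for _ in range(steps):
        g = grad(x)
        accum += g * g                         # sum of ALL past squared gradients
        x -= lr * g / (math.sqrt(accum) + eps)
    return x


def rmsprop(x, lr=0.1, rho=0.9, eps=1e-8, steps=100):
    avg = 0.0
    for _ in range(steps):
        g = grad(x)
        avg = rho * avg + (1.0 - rho) * g * g  # moving average favors recent gradients
        x -= lr * g / (math.sqrt(avg) + eps)
    return x


def adam(x, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=100):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1.0 - beta1) * g      # momentum-style first moment
        v = beta2 * v + (1.0 - beta2) * g * g  # RMSProp-style second moment
        m_hat = m / (1.0 - beta1 ** t)         # bias correction
        v_hat = v / (1.0 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


start = 5.0
results = {fn.__name__: fn(start) for fn in (sgd, momentum_sgd, adagrad, rmsprop, adam)}
for name, value in results.items():
    print(f"{name}: {value:.6f}")
```

On a problem this simple every method moves toward the minimum at zero; the choice matters more on real models, where gradients are noisy and differ widely in scale between parameters.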

Model testing

DLHUB includes a built-in test interface for checking model performance before deployment to production. Users can evaluate the trained model on a new dataset, get instant feedback, and make any necessary adjustments.
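As a rough picture of what evaluating on a new dataset involves, the Python sketch below compares predicted labels with true labels and reports accuracy along with a count of each (true, predicted) pair. The metrics shown by DLHUB's test interface are not listed on this page, so the `evaluate` function and the sample labels are assumptions for illustration.

```python
from collections import Counter


def evaluate(y_true, y_pred):
    """Return (accuracy, confusion counts) for two equal-length label lists."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and the same length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    confusion = Counter(zip(y_true, y_pred))   # keys are (true, predicted)
    return correct / len(y_true), confusion


# Made-up labels for a hypothetical defect-inspection classifier.
labels = ["ok", "ok", "defect", "defect", "ok"]
preds = ["ok", "defect", "defect", "defect", "ok"]

accuracy, confusion = evaluate(labels, preds)
print(f"accuracy = {accuracy:.2f}")
for (true_label, predicted), count in sorted(confusion.items()):
    print(f"true={true_label} predicted={predicted}: {count}")
```

The per-pair counts are often more useful than accuracy alone, because they show which classes the model confuses and therefore where more training data may help.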

Deploy model to LabVIEW

DLHUB lets you export your models in .zip format. The zip file contains all the information needed to deploy the model in LabVIEW using ANSDL, including the model's architecture, weights, and biases.
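Before handing an exported archive to another system, it can be useful to confirm that it is intact. The Python sketch below uses only the standard `zipfile` module to verify an archive and list its entries. The internal layout of a DLHUB export is not documented on this page, so the demo builds a stand-in archive with a placeholder entry; the names `list_export`, `exported_model.zip`, and `placeholder.bin` are hypothetical.

```python
import os
import tempfile
import zipfile


def list_export(zip_path):
    """Check archive integrity and return (filename, size in bytes) pairs."""
    with zipfile.ZipFile(zip_path) as archive:
        corrupt = archive.testzip()            # first bad entry, or None if all pass
        if corrupt is not None:
            raise ValueError(f"corrupt entry in archive: {corrupt}")
        return [(info.filename, info.file_size) for info in archive.infolist()]


# Demo with a stand-in archive; a real export would be used in its place.
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = os.path.join(tmp_dir, "exported_model.zip")
    with zipfile.ZipFile(demo_path, "w") as archive:
        archive.writestr("placeholder.bin", b"\x00" * 16)
    entries = list_export(demo_path)

for name, size in entries:
    print(f"{name}: {size} bytes")
```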

Trusted by leading global companies